Search through images and videos using AI models that run locally. Private, fast, and offline-first, powered by bge-large embeddings, moondream vision, and Whisper transcription.

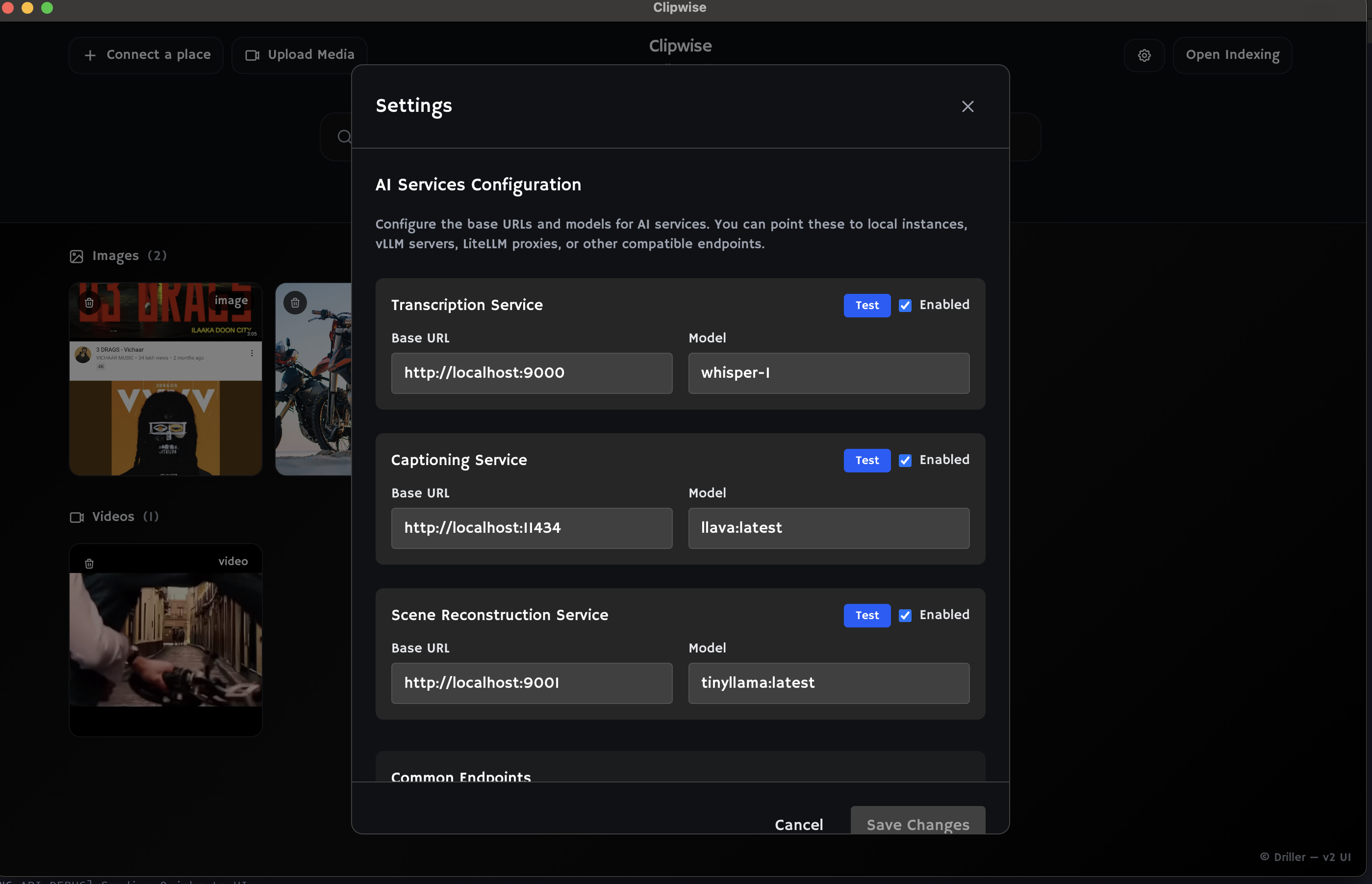

Cinestar requires Docker Compose to run its AI services locally. See the setup instructions.

Find installers and release notes on the Cinestar SourceForge project page.

All inference runs locally. Your media never leaves your machine.

Embeddings (bge-large), vision (moondream), and Whisper transcripts work together.

Embed once, query instantly with SQLite-Vec. Timecodes and thumbnails included.
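To make "embed once, query instantly" concrete, here is a minimal sketch of the idea using only Python's standard library. It is not the SQLite-Vec API: it stores each vector as a float32 BLOB in plain SQLite and ranks rows by brute-force cosine similarity as a stand-in. The table name, column names, file names, and three-dimensional vectors are all hypothetical; real embeddings would come from the embedding model.

```python
import math
import sqlite3
import struct


def pack(vec):
    """Serialize a list of floats as a float32 BLOB."""
    return struct.pack(f"{len(vec)}f", *vec)


def unpack(blob):
    """Deserialize a float32 BLOB back into a list of floats."""
    return list(struct.unpack(f"{len(blob) // 4}f", blob))


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def build_index(conn, rows):
    """Store each moment once: path, timecode, thumbnail, embedding."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS moments "
        "(path TEXT, timecode TEXT, thumbnail TEXT, embedding BLOB)"
    )
    conn.executemany(
        "INSERT INTO moments VALUES (?, ?, ?, ?)",
        [(p, t, th, pack(v)) for p, t, th, v in rows],
    )
    conn.commit()


def search(conn, query_vec, k=3):
    """Return the k most similar moments as (score, path, timecode, thumbnail)."""
    scored = []
    cursor = conn.execute(
        "SELECT path, timecode, thumbnail, embedding FROM moments"
    )
    for path, timecode, thumbnail, blob in cursor:
        scored.append((cosine(query_vec, unpack(blob)), path, timecode, thumbnail))
    scored.sort(key=lambda row: row[0], reverse=True)
    return scored[:k]


# Hypothetical toy data: three moments with made-up 3-d embeddings.
conn = sqlite3.connect(":memory:")
build_index(conn, [
    ("beach.mp4", "00:01:12", "beach_0072.jpg", [0.9, 0.1, 0.0]),
    ("party.mp4", "00:14:03", "party_0843.jpg", [0.1, 0.9, 0.2]),
    ("hike.mp4", "00:05:40", "hike_0340.jpg", [0.2, 0.1, 0.9]),
])
top = search(conn, [1.0, 0.0, 0.0], k=1)
print(top[0][1], top[0][2])  # beach.mp4 00:01:12
```

The expensive step (embedding) happens once at insert time; each query only embeds the search text and compares it against stored vectors. A vector extension such as SQLite-Vec would replace the Python loop with an indexed nearest-neighbor query.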

One command starts all AI services locally. No complex configuration needed.

All AI models run on your hardware. No cloud services, no data uploads, no tracking.

Powered by open-source models like bge-large, moondream, and Whisper running entirely on your machine.

No analytics, no telemetry, no usage tracking. Your media and search queries remain completely private.

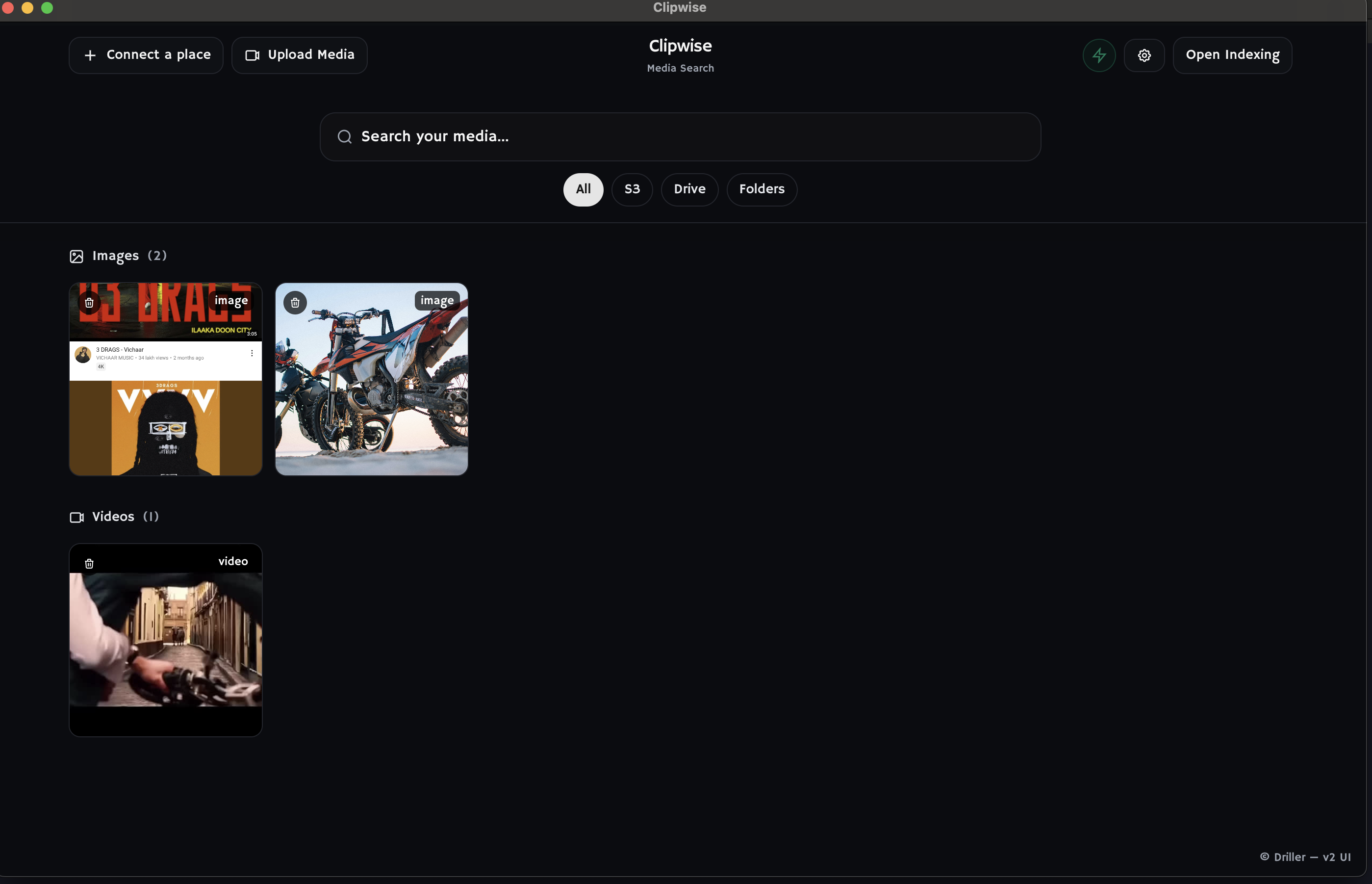

Transform your photo and video collection into a searchable archive. Find "that beach sunset from 2019" or "mom's birthday party" instantly.

Quickly find specific moments in hours of raw footage. Search for "when I dropped the camera" or "the reaction shot" across all your projects.

Manage large video libraries for news, documentaries, or film production. Enable teams to find specific content without expensive cloud services.

Analyze interview footage, focus groups, or observational studies. Search for specific topics, emotions, or behaviors across research datasets.

Adapt Cinestar to your specific needs with a powerful plugin architecture that grows with your requirements.

Google Photos-style face recognition, automatic album creation, smart sharing features, and social media integration.

Advanced metadata extraction, custom transcoding workflows, collaborative editing tools, and enterprise-grade security features.

LDAP integration, audit trails, automated backup systems, compliance reporting, and multi-user access controls.

Built with an open API: create custom plugins for your specific industry needs. From healthcare to education, adapt Cinestar to any use case.

Cinestar leverages state-of-the-art open-source models to deliver powerful local AI capabilities without compromising your privacy.

bge-large provides high-quality text and image embeddings for semantic search, converting your content into searchable vector representations.

Moondream is an advanced vision-language model that generates detailed captions and descriptions of image and video content.

Llama 3.2 is an efficient language model that handles general-purpose tasks, including search query processing, content reconstruction, and natural language understanding.

Three simple steps to transform your media into a searchable archive

Drop folders or videos. We transcribe (Whisper), embed frames (BGE), and generate captions (Moondream).

Store vectors in SQLite-Vec. Llama 3.2 processes and organizes content. Everything stays local.

Natural language queries return exact moments with timecodes and thumbnails powered by our AI models.
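The three steps above can be sketched end to end in a few lines of Python. This is an illustration under loud assumptions, not Cinestar's implementation: the `Moment` record, the sample data, and every function name are hypothetical, and `embed` is a toy bag-of-words counter standing in for a real embedding model. Transcript segments and frame captions are treated the same way: each is a piece of text attached to a start time.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Moment:
    video: str
    start_seconds: float
    text: str  # a transcript segment or a frame caption


def timecode(seconds):
    """Format a number of seconds as HH:MM:SS."""
    s = int(seconds)
    return f"{s // 3600:02d}:{s % 3600 // 60:02d}:{s % 60:02d}"


def embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)  # Counter yields 0 for missing words
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def build_index(moments):
    """Steps 1 and 2: embed each moment once and keep it with its vector."""
    return [(m, embed(m.text)) for m in moments]


def search(index, query, k=1):
    """Step 3: rank moments against the query, return video, timecode, text."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: similarity(q, item[1]), reverse=True)
    return [(m.video, timecode(m.start_seconds), m.text) for m, _ in ranked[:k]]


# Hypothetical sample data mixing captions and transcript segments.
moments = [
    Moment("trip.mp4", 72.0, "a sunset over the beach"),
    Moment("trip.mp4", 310.5, "careful, I dropped the camera"),
    Moment("party.mp4", 3725.0, "happy birthday mom"),
]
index = build_index(moments)
hits = search(index, "when I dropped the camera")
print(hits[0][:2])  # ('trip.mp4', '00:05:10')
```

Word counts only match exact words, so this toy version misses paraphrases; a learned embedding model is what lets a query like "beach sunset" find a caption worded differently.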

Start with the open-source core. Add capabilities as you grow.